conferences

My notes from conference sessions I have attended.

AgileIndy 2024

October 18, 2024

Indianapolis

Opening Keynote: Build to Learn, Build to Earn

with Jeff Patton

According to *Accelerate*, teams that score high on DORA metrics are 2x more likely to meet their commercial and business goals.

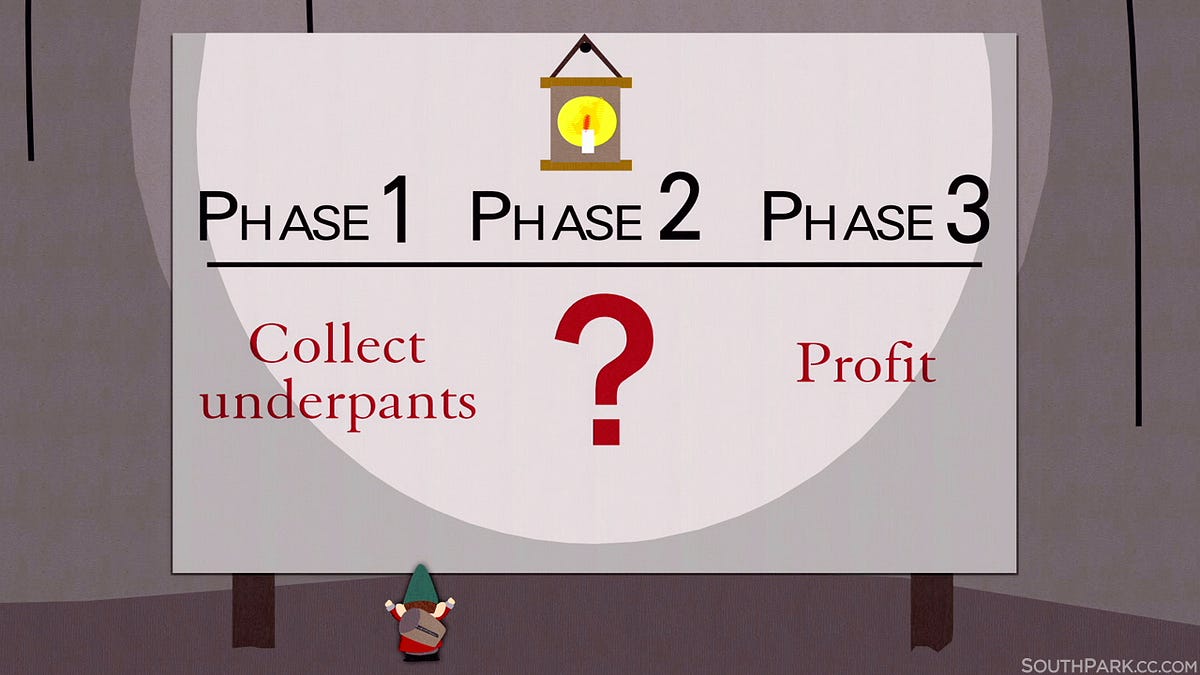
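For readers who haven’t met them, the DORA research behind *Accelerate* tracks four key metrics: deployment frequency, lead time for changes, time to restore service, and change failure rate. Below is a minimal Python sketch of computing them from deployment records. The `Deployment` record, its field names, and the choice of median lead time and mean restore time are assumptions for illustration, not DORA’s official tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean, median
from typing import Dict, List, Optional


@dataclass
class Deployment:
    # Hypothetical record shape; real delivery pipelines store this differently.
    committed_at: datetime                   # when the change was committed
    deployed_at: datetime                    # when it reached production
    failed: bool = False                     # did it cause a production failure?
    restored_at: Optional[datetime] = None   # when service was restored, if it failed


def hours(delta: timedelta) -> float:
    """Convert a timedelta to fractional hours."""
    return delta.total_seconds() / 3600


def dora_metrics(deploys: List[Deployment], period_days: int) -> Dict[str, float]:
    """Compute the four DORA key metrics for one reporting period."""
    if not deploys or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    failures = [d for d in deploys if d.failed]
    lead_times = [hours(d.deployed_at - d.committed_at) for d in deploys]
    restore_times = [
        hours(d.restored_at - d.deployed_at)
        for d in failures
        if d.restored_at is not None
    ]
    return {
        # Deployment frequency: production deployments per day.
        "deployment_frequency_per_day": len(deploys) / period_days,
        # Lead time for changes: median hours from commit to production.
        "lead_time_hours": median(lead_times),
        # Change failure rate: share of deployments that caused a failure.
        "change_failure_rate": len(failures) / len(deploys),
        # Time to restore: mean hours from the failed deploy to recovery
        # (simplified: measured from the deploy, not from incident detection).
        "time_to_restore_hours": mean(restore_times) if restore_times else 0.0,
    }
```

Four deployments over two days, one of which failed and was fixed an hour later, give a frequency of 2 per day and a change failure rate of 25%.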

“Agile” has become hyperfocused on collecting underpants faster.

Traditional (physical) products improve through obsolescence and replacement. Software products improve in place.

Users aren’t necessarily choosers: most Jira users can’t opt out, because it’s what their org has chosen to use. (A corollary or perversion of the network effect?)

| Phase 1 | Phase 2 | Phase 3 | |
|---|---|---|---|
| (do we even understand the problem?) | ideas | customer value | profit |
| Vision and Strategy | output | outcome | impact |
| (bad “Agile” gets stuck here) | | | |

We have a bias that our ideas are right.

Startup incubators are full of positive, bullish people, 90% of whom won’t have a job a year from now.

IMPACT > velocity

MINIMIZE output, MAXIMIZE outcomes

(That’s what the learning is for)

Some questions to answer (with evidence!)

1. Do you understand the problem?

2. Will they want your solution?

3. Can you build it predictably?

4. Can people easily learn to use it?

Usability-test your idea w/paper mockups; it’s a cheap way to learn!

- Visual fidelity is LOW

- Data fidelity is OK

- Functional fidelity is HIGH

5. Will they actually use it?

Build it, but not to scale.

“Fake it, Build it, Ship it, Tweak it” (Spotify, 2014)

Release to learn (MVP)

✨ NAIL IT BEFORE YOU SCALE IT

Tripadvisor’s “404 Test” (2011)

A call to action shown on the site (temporarily, for an hour or a day) would redirect to a 404 page but log the click.

AKA a “painted door”

(Does this indicate demand, or merely curiosity?)
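The 404 test is simple to sketch. Below is a minimal WSGI app using only the Python standard library, assuming a hypothetical `/new-feature` path behind the call to action. It is not Tripadvisor’s implementation, which the talk didn’t describe; it only shows the mechanism of logging the click and serving a 404.

```python
import logging
from collections import Counter

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("painted_door")

# Hypothetical URL behind the call to action being tested.
PAINTED_DOOR_PATH = "/new-feature"
clicks = Counter()  # click counts per path (in-memory, for the sketch only)


def painted_door_app(environ, start_response):
    """WSGI app: record clicks on the painted door, then serve a 404."""
    path = environ.get("PATH_INFO", "/")
    if path == PAINTED_DOOR_PATH:
        clicks[path] += 1
        log.info("painted-door click on %s (total %d)", path, clicks[path])
    # The feature doesn't exist yet, so every request ends in a 404.
    start_response("404 Not Found", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Not found"]


def click_through_rate(door_clicks, impressions):
    """Clicks per impression of the call to action during the test window."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return door_clicks / impressions
```

To try it locally, `wsgiref.simple_server.make_server("", 8000, painted_door_app).serve_forever()` serves it. Comparing clicks to impressions gives a click-through rate, but as the question above notes, a click alone can’t separate demand from curiosity.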

Avoiding False Starts with Artificial Intelligence

with Rob Herbig and Jordan Thayer

Our intuitions about AI are wrong. Some common misconceptions:

AI is generally capable of doing human things

“I can pick up this glass of water without breaking it, why can’t the robot simply do the same?”

Automation is ALL or NOTHING

It’s not valuable or feasible to automate an entire stream of work/value, only parts of it. Automation is a sliding scale.
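One common way to treat automation as a sliding scale is confidence-based triage: automate only the cases a model is confident about and route the rest to a person. This is an assumption for illustration, not a mechanism the speakers prescribed; the `Item` type and its confidence score are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Item:
    item_id: str
    confidence: float  # hypothetical model confidence in [0, 1]


def triage(items: List[Item], threshold: float) -> Tuple[List[Item], List[Item]]:
    """Split work: automate confident cases, route the rest to a person."""
    automated = [i for i in items if i.confidence >= threshold]
    manual = [i for i in items if i.confidence < threshold]
    return automated, manual


def automation_share(items: List[Item], threshold: float) -> float:
    """Fraction of the work stream handled automatically at this threshold."""
    automated, _ = triage(items, threshold)
    return len(automated) / len(items) if items else 0.0
```

Raising `threshold` shifts work toward people; lowering it shifts work toward automation. Neither extreme has to be the goal.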

Models are impartial

For example, in training a model to distinguish between a husky and a wolf, the model learned instead to identify the background of the photo—wolves were typically in front of woods and snow, huskies in backyards or houses.

There is bias in our data.
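The husky/wolf failure can be reproduced in miniature. Below is a toy Python sketch, assuming two hypothetical boolean features per photo. When the training data makes the background a perfect predictor, a learner that picks the single best feature (a decision stump) picks the background rather than the animal. This is not the model from the original study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Photo:
    snowy_background: bool  # spurious cue: the setting, not the animal
    wolf_like_face: bool    # the cue we actually want (a noisy signal here)
    label: str              # "wolf" or "husky"


# Toy training set with biased sampling: every wolf photo has snow and no
# husky photo does. The face cue is right only 80% of the time.
TRAIN = (
    [Photo(True, True, "wolf")] * 8 + [Photo(True, False, "wolf")] * 2
    + [Photo(False, False, "husky")] * 8 + [Photo(False, True, "husky")] * 2
)

FEATURES = ("snowy_background", "wolf_like_face")


def train_stump(data):
    """Pick the one feature whose True/False value best matches wolf/husky."""
    def accuracy(feature):
        hits = sum(getattr(p, feature) == (p.label == "wolf") for p in data)
        return hits / len(data)
    return max(FEATURES, key=accuracy)


def predict(feature, photo):
    """Call it a wolf whenever the chosen feature is present."""
    return "wolf" if getattr(photo, feature) else "husky"
```

The background scores 100% on the training data and the face only 80%, so the stump learns the background, and a husky photographed in snow then comes out as a wolf.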

AI means human-like intelligence

In a 1997 chess match with Kasparov, Deep Blue made a defensive move while it was ahead. This was so counterintuitive that it threw Kasparov off his game, and he lost.

Why would it make that move? What does it know that I’m not seeing?

Deep Blue’s programmers identified the move as a bug.

In a 2016 game of Go (which is harder for AI than chess), DeepMind’s AlphaGo made an unexpected move that similarly threw Lee Sedol. After losing, he and other Go masters changed how they played.

This whole thing is a cat-and-mouse game. Later, an amateur player beat a top Go-playing AI… by using an AI-generated strategy.

Closing Keynote

with Pete Anderson and Josh VandeWiele

Product Culture has humility at its center: “I’m probably wrong”

A product is the thing that we create that adds value for our customers. (co-created definition w/David Hussman)

“We are environmentalists: transforming the environment [of work] is more impactful than just focusing on teams.”

The pilot/tiger team approach is better for grassroots transformation efforts. For large-scale transformations, focus on removing all the stuff that stops teams from shipping.

As for consulting business models: embedded coaching is out, and selling a retainer to advise leaders and coaches is in.